What Is DeepSeek V2?

With improvements in efficiency, performance, and affordability, DeepSeek V2 has become a noteworthy milestone in the rapidly changing field of artificial intelligence (AI). Released in May 2024, DeepSeek V2 differs from its predecessors and contemporaries in its innovative architectural design and training approach.

Important Elements And Architecture

DeepSeek V2 uses a Mixture-of-Experts (MoE) architecture with 236 billion parameters in total, of which 21 billion are activated for each token during processing. Because only a fraction of the parameters run for any given token, the model maintains high performance while using computing resources economically. One notable improvement is Multi-head Latent Attention (MLA), which compresses the key-value (KV) cache and helps the model support a context window of 128,000 tokens. As a result, the model can efficiently handle and produce longer text sequences.
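To make the idea of sparse activation concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is an illustration under stated assumptions, not DeepSeek V2's actual routing code: the toy experts, the router scores, and the function names `route_top_k` and `moe_forward` are all hypothetical. Only the 21 billion and 236 billion figures come from the article.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of router scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalise over the chosen experts
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, router_scores, k):
    """Combine outputs of the selected experts only; the others are never run."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))

# Hypothetical toy experts: scalar functions standing in for feed-forward blocks.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
router_scores = [0.1, 2.0, 0.3, 1.5]  # assumed scores for one token
selected = route_top_k(router_scores, k=2)
output = moe_forward(2.0, experts, router_scores, k=2)

# Figures from the article: 21B of 236B parameters are active per token.
active_fraction = 21 / 236
print(f"selected experts: {[i for i, _ in selected]}")
print(f"active parameter share: {active_fraction:.1%}")
```

With the article's figures, roughly 9% of the parameters take part in processing each token, which is where the compute savings of a sparse model come from.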

Methods Of Training

DeepSeek V2 was pretrained on a varied, high-quality corpus of 8.1 trillion tokens, with a deliberate emphasis on Chinese: the corpus contained about 12% more Chinese tokens than English tokens. Supervised fine-tuning (SFT) came next, followed by a two-stage reinforcement learning (RL) procedure using Group Relative Policy Optimization (GRPO). The first RL stage concentrated on solving mathematics and coding problems, while the second sought to improve the model’s helpfulness, safety, and adherence to predefined rules.
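The core idea behind GRPO is that each sampled response is scored relative to the other responses drawn for the same prompt, so no separate value model is needed. The sketch below shows only that normalisation step, under assumptions: the reward values are made up, the function name `group_relative_advantages` is hypothetical, and the use of the population standard deviation is a choice made here for illustration. The full policy update is not shown.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-8):
    """Normalise each reward against its own group: (r - mean) / (std + eps)."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # assumption: population standard deviation
    return [(r - mean) / (std + eps) for r in rewards]

# Hypothetical rewards for four responses to one maths prompt (1 = correct).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Responses that beat the group average get a positive advantage and are reinforced, while below-average responses get a negative one.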

Efficiency And Performance

DeepSeek V2 performs strongly on both standard benchmarks and open-ended generation tests. Compared with its predecessor, DeepSeek 67B, V2 cuts training costs by 42.5%, reduces the KV cache by 93.3%, and raises maximum generation throughput to 5.76 times that of the earlier model.
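The percentages are easier to compare when converted into ratios. The short calculation below does only that arithmetic on the figures quoted in the article. The helper name `remaining_fraction` is hypothetical, and no new measurements are introduced.

```python
def remaining_fraction(reduction_percent):
    """Fraction of the original quantity left after a percentage reduction."""
    return 1 - reduction_percent / 100

kv_remaining = remaining_fraction(93.3)         # share of the KV cache still needed
kv_shrink_factor = 1 / kv_remaining             # how many times smaller the cache is
training_cost_ratio = remaining_fraction(42.5)  # cost relative to the predecessor

print(f"KV cache: {kv_remaining:.1%} of the original, about {kv_shrink_factor:.1f}x smaller")
print(f"Training cost: {training_cost_ratio:.1%} of the original")
```

In other words, a 93.3% reduction leaves a KV cache roughly one fifteenth of its former size, and training costs a little over half as much as before.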

Variants And Later Publications

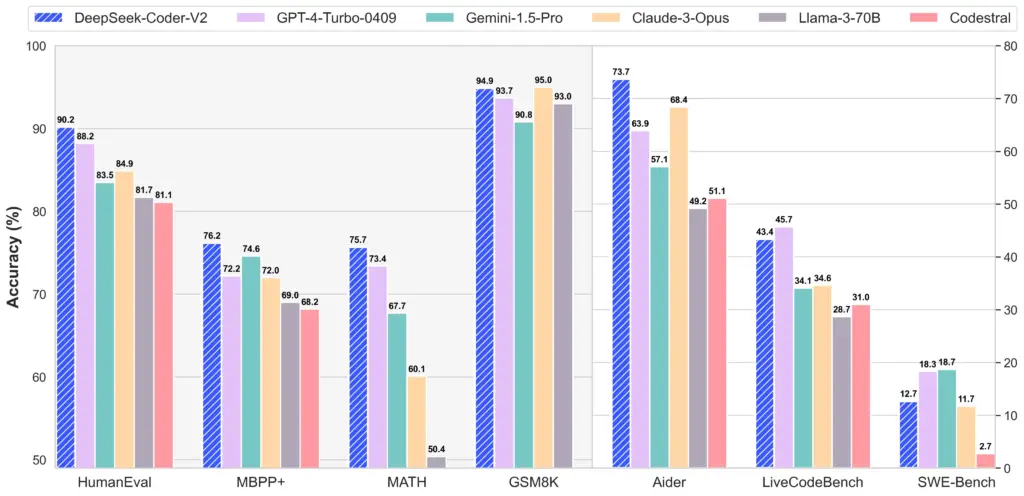

In addition to the standard model, the DeepSeek V2 series includes chat versions and the smaller DeepSeek V2-Lite models. The V2-Lite models were trained in a similar manner, although the Lite chat version received only supervised fine-tuning, without reinforcement learning. The DeepSeek-Coder-V2 series, which focuses on code intelligence and includes V2-Base, V2-Lite-Base, V2-Instruct, and V2-Lite-Instruct, was published in June 2024. These models were further pretrained and fine-tuned to improve their coding and mathematical problem-solving skills.

Effects On The AI Sector

The introduction of DeepSeek V2 has had a significant impact on the AI sector, calling into question the idea that the field can only be dominated by big tech companies with enormous financial resources. Its economical development and efficient operation have challenged investment assumptions and shown that smaller companies can compete with more established tech giants.

In Conclusion

DeepSeek V2 combines innovative architectural design with efficient training methods to reach high performance at low cost, and it represents a significant step in AI development. Its success highlights the possibility of more affordable and accessible approaches to AI research and development.

FAQs

What Is DeepSeek V2’s Architecture?

DeepSeek V2 uses a Mixture-of-Experts (MoE) architecture with 236 billion parameters in total, of which 21 billion are activated for each token during processing.

How Was DeepSeek V2 Trained?

The model was pretrained on a corpus of 8.1 trillion tokens. This was followed by supervised fine-tuning and a two-stage reinforcement learning process that focused first on mathematics and coding tasks and then on general helpfulness.

What Are The Main Ways That DeepSeek V2 Is Better Than Its Predecessor?

Compared with DeepSeek 67B, DeepSeek V2 provides better performance, a 42.5% decrease in training costs, a 93.3% reduction in KV cache size, and maximum generation throughput of up to 5.76 times that of the earlier model.

What Is DeepSeek V2’s Context Window Size?

The model has a context window of 128,000 tokens. Multi-head Latent Attention (MLA), which compresses the KV cache, helps make a window of that length practical.

How Has DeepSeek V2 Affected The Artificial Intelligence Sector?

The efficient and economical development of DeepSeek V2 has challenged the dominance of giant tech companies in AI, showing that smaller organisations can also make important strides in the field.